Self-Hosting Infisical with Docker Swarm

This guide provides step-by-step instructions on how to self-host Infisical using Docker Swarm. This is particularly helpful for those wanting to self-host Infisical on premises while still maintaining high availability (HA) for the core Infisical components. The guide will demonstrate a setup with three nodes, ensuring that the cluster can tolerate the failure of one node while remaining fully operational.

Docker Swarm

Docker Swarm is a native clustering and orchestration solution for Docker containers. It simplifies the deployment and management of containerized applications across multiple nodes, making it a great choice for self-hosting Infisical. Kubernetes requires a deep understanding of its ecosystem, whereas if you’re accustomed to Docker and Docker Compose, you’re already familiar with most of Docker Swarm. For this reason, we suggest teams use Docker Swarm to deploy Infisical in a highly available and fault-tolerant manner.

Prerequisites

- Understanding of Docker Swarm

- Bare-metal or virtual machines (VMs) with Docker installed on each one.

- Docker Swarm initialized on the VMs.

Core Components for High Availability

The provided Docker stack includes the following core components to achieve high availability:

- Spilo: Spilo is used to run PostgreSQL with Patroni for HA and automatic failover. It utilizes etcd for leader election of the PostgreSQL instances.

- Redis: Redis is used for caching and is set up with Redis Sentinel for HA. The stack includes three Redis replicas and three Redis Sentinel instances for monitoring and failover.

- Infisical: Infisical is stateless, allowing for easy scaling and replication across multiple nodes.

- HAProxy: HAProxy is used as a load balancer to distribute traffic to the PostgreSQL and Redis instances. It is configured to perform health checks and route requests to the appropriate backend services.

Node Failure Tolerance

To ensure Infisical is highly available and fault tolerant, it’s important to choose an appropriate number of nodes for the cluster. The following table shows the relationship between the number of nodes and the maximum number of nodes that can be down while the cluster continues to function:

| Total Nodes | Max Nodes Down | Min Nodes Required |
|---|---|---|
| 1 | 0 | 1 |
| 2 | 0 | 2 |
| 3 | 1 | 2 |
| 4 | 1 | 3 |
| 5 | 2 | 3 |
| 6 | 2 | 4 |
| 7 | 3 | 4 |

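The minimum number of nodes required is floor(n/2) + 1, where n is the total number of nodes. The maximum number of nodes that can be down is n minus that minimum.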
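The quorum arithmetic can be checked with a few lines of shell. This is a minimal sketch; the function names `min_nodes` and `max_down` are hypothetical helpers introduced here for illustration, not part of any Infisical or Docker tooling.

```shell
# min_nodes N: minimum nodes that must stay up, floor(N/2) + 1.
# Shell integer division already rounds down for positive N.
min_nodes() {
  echo $(( $1 / 2 + 1 ))
}

# max_down N: nodes that may fail while a majority is still available.
max_down() {
  echo $(( $1 - ($1 / 2 + 1) ))
}

# Print "total max_down min_required" for cluster sizes 1 through 7.
for n in 1 2 3 4 5 6 7; do
  echo "$n $(max_down "$n") $(min_nodes "$n")"
done
```

Note that adding a fourth node to a three-node cluster does not raise the number of tolerated failures, which is why odd cluster sizes are the usual choice.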

This guide will demonstrate a setup with three nodes, which allows for one node to be down while the cluster remains operational. This fault tolerance applies to the following components:

- Redis Sentinel: With three Sentinel instances, one instance can be down, and the remaining two can still form a quorum to make decisions.

- Redis: With three Redis instances (one master and two replicas), one instance can be down, and the remaining two can continue to provide caching services.

- PostgreSQL: With three PostgreSQL instances managed by Patroni and etcd, one instance can be down, and the remaining two can maintain data consistency and availability.

- Manager Nodes: In a Docker Swarm cluster with three manager nodes, one manager node can be down, and the remaining two can continue to manage the cluster. For the sake of simplicity, the example in this guide only contains one manager node.

Docker Deployment Stack Overview

The Docker stack file used in this guide defines the services and their configurations for deploying Infisical in a highly available manner. The main components of this stack are as follows.

- HAProxy: The HAProxy service is configured to expose ports for accessing PostgreSQL (5433 for the master, 5434 for replicas), Redis master (6379), and the Infisical backend (8080). It uses a config file (`haproxy.cfg`) to define the load balancing and health check rules.

- Infisical: The Infisical backend service is deployed with the latest PostgreSQL-compatible image. It is connected to the `infisical` network and uses secrets for environment variables.

- etcd: Three etcd instances (etcd1, etcd2, etcd3) are deployed, one on each node, to provide distributed key-value storage for leader election and configuration management.

- Spilo: Three Spilo instances (spolo1, spolo2, spolo3) are deployed, one on each node, to run PostgreSQL with Patroni for high availability. They are connected to the `infisical` network and use persistent volumes for data storage.

- Redis: Three Redis instances (redis_replica0, redis_replica1, redis_replica2) are deployed, one on each node, with redis_replica0 acting as the master. They are connected to the `infisical` network.

- Redis Sentinel: Three Redis Sentinel instances (redis_sentinel1, redis_sentinel2, redis_sentinel3) are deployed, one on each node, to monitor and manage the Redis instances. They are connected to the `infisical` network.

Deployment instructions

Initialize Docker Swarm on one of the VMs by running the following command
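A minimal sketch of the initialization step, assuming the standard `docker swarm init` command and its `--advertise-addr` flag. `<MANAGER_NODE_IP>` is a placeholder to substitute before running.

```shell
# Run on the VM that will act as the manager node.
# The command prints a `docker swarm join` line containing the join token.
docker swarm init --advertise-addr <MANAGER_NODE_IP>
```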

Replace <MANAGER_NODE_IP> with the IP address of the VM that will serve as the manager node. Remember to copy the join token returned by this init command.

On the other VMs, join the Docker Swarm by running the command provided by the manager node
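The join command printed by the manager generally has the following shape. This is a sketch with placeholders; port 2377 is Docker Swarm's default cluster management port and is assumed here to be unchanged.

```shell
# Run on each of the other VMs to join the swarm as a worker.
docker swarm join --token <JOIN_TOKEN> <MANAGER_NODE_IP>:2377
```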

Replace <JOIN_TOKEN> with the token provided by the manager node during initialization.

Label the nodes with `node.labels.name` to specify their roles.

Labels on nodes will help us select where stateful components such as Postgres and Redis are deployed. To label nodes, follow the steps below.

Replace <NODE1_ID>, <NODE2_ID>, and <NODE3_ID> with the respective node IDs.
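The labeling step might look like the sketch below, using the standard `docker node update --label-add` command. The label key `name` corresponds to the `node.labels.name` constraint; the values `node1`, `node2`, and `node3` are assumptions and must match whatever the placement constraints in your stack file expect.

```shell
# Run on the manager node, once per node in the swarm.
docker node update --label-add name=node1 <NODE1_ID>
docker node update --label-add name=node2 <NODE2_ID>
docker node update --label-add name=node3 <NODE3_ID>
```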

To view the list of nodes and their IDs, run `docker node ls` on the manager node.

Copy deployment assets to manager node

Copy the Docker stack YAML file, HAProxy configuration file and example .env file to the manager node. Ensure that all 3 files are placed in the same directory.

- Docker stack file (rename to infisical-stack.yaml)

- HAProxy configuration file (rename to haproxy.cfg)

- Example .env file (rename to .env)
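With the three files in place, a stack is typically launched with `docker stack deploy`. The sketch below assumes it is run on the manager node from the directory containing the files, and that the values in `.env` have been reviewed first. The stack name `infisical` is an assumption chosen for illustration.

```shell
# Deploy (or update) the stack described by infisical-stack.yaml.
docker stack deploy -c infisical-stack.yaml infisical

# List the stack's services and their replica counts.
docker stack services infisical
```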

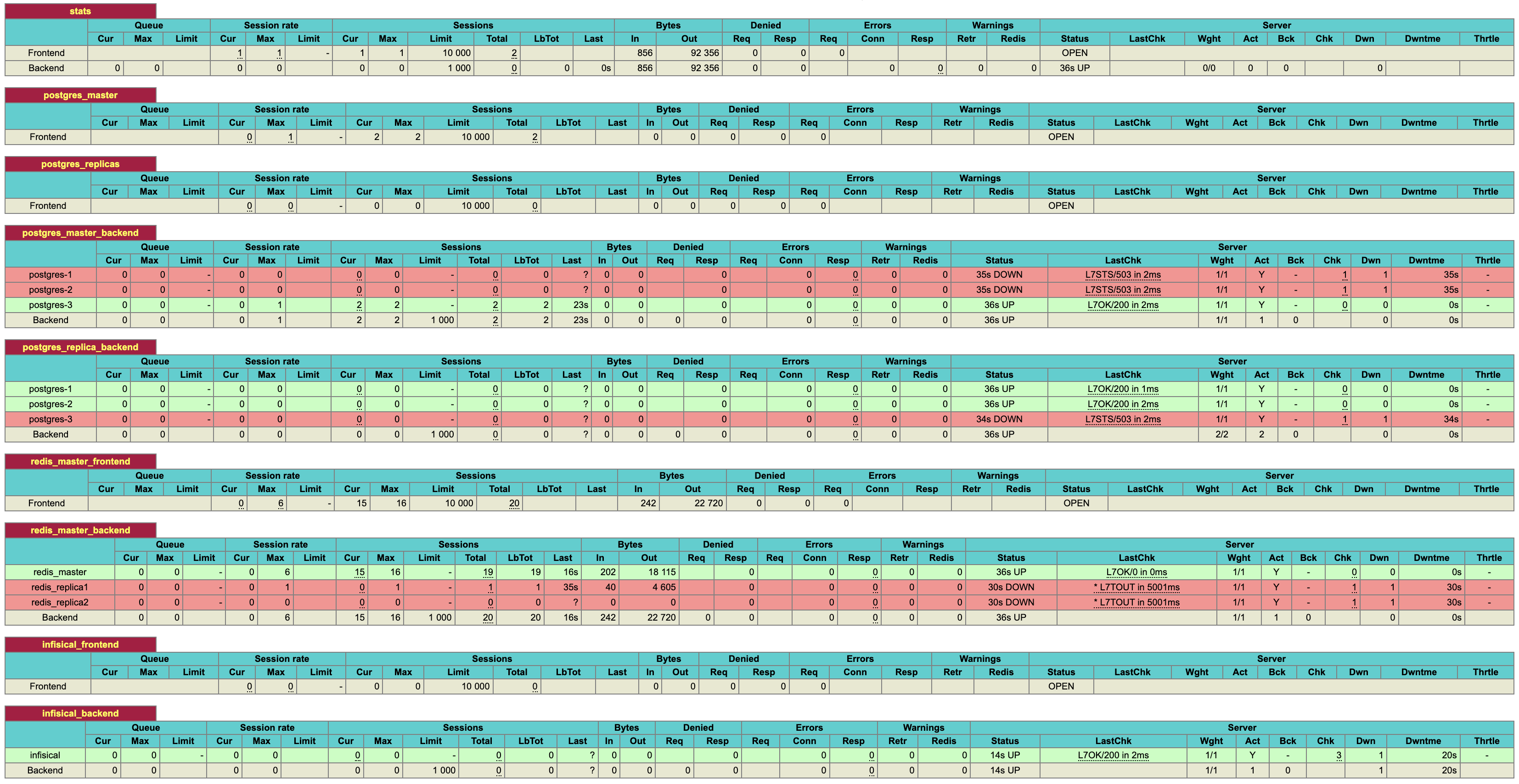

View service status

To view the health of services in your Infisical cluster, visit port <NODE-IP>:7001 of any node in your Docker swarm.

This port will expose the HAProxy stats. The stats page may take 1-2 minutes to become accessible.

Run the following command to view the IPs of the nodes in your Docker swarm.
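One way to list node addresses with the standard Docker CLI, run from a manager node. This is a sketch: the output format string is an illustrative choice rather than something prescribed by this guide.

```shell
# Print each node's hostname and IP address.
docker node ls -q | xargs docker node inspect \
  --format '{{ .Description.Hostname }}: {{ .Status.Addr }}'
```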

FAQ

How do I scale Infisical cluster further?

To further scale and make the system more resilient, you can add more nodes to the Docker Swarm and update the stack configuration accordingly:

- Add new VMs and join them to the Docker Swarm as worker nodes.

- Update the Docker stack YAML file to include the new nodes in the `deploy` section of the relevant services, specifying the appropriate `node.labels.name` constraints.

- Update the HAProxy configuration file (`haproxy.cfg`) to include the new nodes in the backend sections for PostgreSQL and Redis.

- Redeploy the updated stack using the `docker stack deploy` command.
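The scaling steps above can be sketched with standard Docker CLI commands. `<NEW_NODE_ID>`, the label value `node4`, and the stack name `infisical` are placeholders or assumptions for illustration; the edits to the stack file and `haproxy.cfg` still have to be made by hand.

```shell
# On the manager node: print the join command (with token) for new workers.
docker swarm join-token worker

# On the manager node: label the new node so placement constraints can target it.
docker node update --label-add name=node4 <NEW_NODE_ID>

# After editing infisical-stack.yaml and haproxy.cfg, redeploy the stack.
docker stack deploy -c infisical-stack.yaml infisical
```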

How do I configure backups for Postgres and Redis?

Native tooling for scheduled backups of Postgres and Redis is currently in development.

In the meantime, we recommend using one of the open-source tools available for this purpose.

For Postgres, Spilo provides built-in support for scheduled data dumps.

You can also explore third-party tools for managing database backups; one such tool is docker-db-backup.